The Campaign Against Sex Robots launched to much media fanfare back in September. The brainchild of Dr. Kathleen Richardson from De Montfort University in Leicester, UK, and Dr. Erik Billing from the University of Skovde in Sweden, the campaign aims to highlight the ways in which the development of sex robots could be ‘potentially harmful and will contribute to inequalities in society’. What’s more, despite being a relative newcomer, the campaign may have already achieved its first significant ‘scalp’. The 2nd International Conference on Love and Sex with Robots, organised by sex robot pioneer David Levy, was due to be held in Malaysia this month (November 2015), but was cancelled by Malaysian authorities shortly after the campaign was launched.

Now, to be sure, it’s difficult to claim a direct causal relationship between the campaign and the cancellation of the conference, but there is no doubting the media success of the campaign: it has been featured in major newspapers, weblogs and TV shows around the world. Most recently, Dr Richardson participated in a panel at the Web Summit conference in Dublin, and this was discussed in the national media here in Ireland. Furthermore, the actions of the Malaysian authorities suggest that there is the potential for the campaign to gain some traction.

And yet, I find the Campaign Against Sex Robots somewhat bizarre. I’m puzzled by the media attention being given to it, especially since the ethics and psychology of human-robot relationships (including sexual relationships) has been a topic of serious inquiry for many years. And I’m also puzzled about the position of the campaign and the arguments its proponents proffer. I say this as someone with a bit of form in this area. I have written previously about the potential impact of sex robots on the traditional (human) sex work industry; I have also written about the case for legal bans of certain types of sex robot; and, with my friend and colleague Neil McArthur, I am currently co-editing a collection of essays on the legal, ethical and social implications of sex robots for MIT Press. So I am not unsympathetic to the kinds of issues being raised. But I cannot see what the campaign is driving at.

In this post, I want to provide some support for my puzzlement by analysing the goals of the campaign and the ‘position paper’ it has published in support of these goals. I want to make two main arguments: (i) the goals of the campaign are insufficiently clear and much of its media success may be trading on this lack of clarity; and (ii) the reasons proffered in support of the campaign are either unpersuasive or insufficiently strong to merit a ‘campaign’ against sex robots. I appreciate that others have done some of this critical work before. My goal is to do so in a more thorough way.

(Note: this post is long, far longer than I originally envisaged. If you want to just get the gist of my criticisms, I suggest reading section one and the conclusion, and then having a look at the argument diagrams.)

1. What are the goals of the campaign against sex robots?

Let me start with a prediction: sex robots will become a reality. I say this with some confidence. I am not usually prone to making predictions about the future development of technology. I think people who make such predictions are routinely proved wrong, and hence forced into some awkward backtracking and self-amendment. Nevertheless, I feel pretty sure about this one. My confidence stems from two main sources: (i) history suggests that sex and technology have always gone together, hence if there is to be a revolution in robotics it is likely to include the development of sex robots; and (ii) sex robots already exist (in primitive and unsophisticated forms) and there are several companies actively trying to develop more sophisticated versions (perhaps most notably RealDoll). In making this prediction, I won't make specific claims about the likely form or degree of intelligence that will be associated with these sex robots. But I’m still sure they will exist.

Granting this, it seems to me that there are three stances one can take towards the existence of such robots:

Liberation: i.e. adopt a libertarian attitude towards the creation and deployment of such robots. Allow manufacturers to make them however they see fit, and sell or share them with whoever wants them.

Regulation: i.e. adopt a middle-of-the-road attitude towards the creation and deployment of such robots. Perhaps regulate and restrict the manufacture and/or sale of some types; insist upon certain standards for consumer/social protection for others; but do not implement an outright ban.

Criminalisation: i.e. adopt a restrictive attitude towards the creation and deployment of such robots. Ban their use and manufacture, and possibly seek criminal sanctions for those who breach the terms of those bans (such sanctions need not include incarceration or other forms of harsh treatment).

These three stances define a spectrum. At one end, you have extreme forms of liberation, which would enthusiastically welcome any and all sex robots; and at the other end you would have extreme forms of criminalisation, which would ban any and all sex robots. The great grey middle of ‘regulation’ lies in between.

For what it is worth, I favour a middle-of-the-road attitude. I think there could be some benefits to sex robots, and some problems. On balance, I would lean in favour of liberation for most types of sex robots, but might favour strict regulation or, indeed, restrictions, for other types. For instance, I previously wrote an article suggesting that sex robots catering to rape fantasies, or shaped like children, could plausibly be criminalised. I did not strongly endorse that argument (it rested on a certain moralistic view of the criminal law that I dislike); I did not favour harsh punishment for potential offenders; and I would never claim that this policy would be successful in actually preventing the development or use of such technologies. But that’s not the point: we often criminalise things we never expect to prevent. I was also clear that the argument I made was weak and vulnerable to several potential defeaters. My goal in presenting it was not to defend a particular stance, but rather to map out the terrain for future ethical debate.

Anyway, leaving my own views to the side, the question arises: where on this spectrum do the proponents of the Campaign Against Sex Robots fall?

The answer is unclear. Obviously, they are not in favour of liberation, but are they in favour of regulation or criminalisation? The naming of the campaign suggests something more towards the latter: they are against sex robots. And some of their pronouncements seem to reinforce this more extreme position. For instance, on their ‘About’ page, they say that “an organized approach against the development of sex robots is necessary”. On the same page, they also list a number of relatively unqualified objections to the development of sex robots. These include:

We believe the development of sex robots further sexually objectifies women.

We propose that the development of sex robots will further reduce human empathy that can only be developed by an experience of mutual relationship.

We challenge the view that the development of adult and child sex robots will have a positive benefit to society, but instead further reinforce power relations of inequality and violence.

On top of this, in her ‘position paper’, Richardson notes how she is modeling her campaign on the ‘Stop Killer Robots’ campaign. That campaign works to completely ban autonomous robots with lethal capabilities. If Richardson means for that model to be taken seriously, it suggests a similarly restrictive attitude motivates the Campaign Against Sex Robots.

But despite all this, there is some noticeable equivocation and hedging in what the campaign and its spokespeople have to say. Elsewhere on their “About” page they state that:

We propose to campaign to support the development of ethical technologies that reflect human principles of dignity, mutuality and freedom.

And that they wish:

To encourage computer scientists and roboticists to examine their own conscience when asked to provide code, hardware or ideas to develop this field.

Throughout the position paper, Richardson also makes clear that it is the fact that current sex robot proposals are modeled on a ‘prostitute-john’ relationship that bothers her. This suggests that if sex robots could embody an alternative and more egalitarian relationship she might not be so opposed.

On top of all this, Richardson appears to have disowned the more restrictive attitude in her recent statements. In an article about her appearance at the Web Summit, she is reported to have said we should “think about what it means” to create sex robots, not that we shouldn’t make them at all. That said, in the very same article she is reported to have called for a “ban” on sex robots. Maybe the journalist is being inaccurate in the summary (I wasn’t at the event) or maybe this reflects some genuine ambiguity on Richardson’s part. Either way, it seems problematic to me.

Why? Because I think the Campaign Against Sex Robots is currently trading on an equivocation about its core policy aims. Its branding as a general campaign “against” sex robots, along with the more unqualified objections to their development, seems to suggest that the core aim is to completely ban sex robots of all kinds. This provides juicy fodder for the media, but would require a very strong set of arguments in defence. As I hope to make clear below, I don’t think that the proponents of the campaign have met that high standard. On the other hand, the more reserved and implicitly qualified claims seem to suggest a more modest aim: to encourage creators of sex robots to think more clearly about the ethical risks associated with their development, in particular the impact it could have on gender inequality and objectification. This strikes me as a reasonably unobjectionable aim, one that would not require such strong arguments in defence, but would not be anywhere near as interesting. There are many people who already share this modest aim, and I think most people would not need much to be persuaded of its wisdom. But then the campaign would need to be more honest in its branding. It would need to be renamed something like “The Campaign for Ethical Sex Robots”.

In any event, until the Campaign provides more clarity about its core policy aims, it will be difficult to know what to make of it.

2. Why Campaign Against Sex Robots in the First Place?

Granting this difficulty, I nevertheless propose to evaluate the main arguments in favour of the campaign, as presented by its proponents. For this, I turn to the

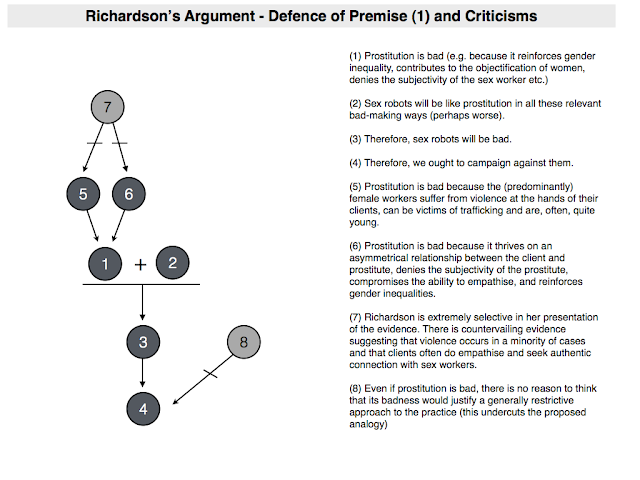

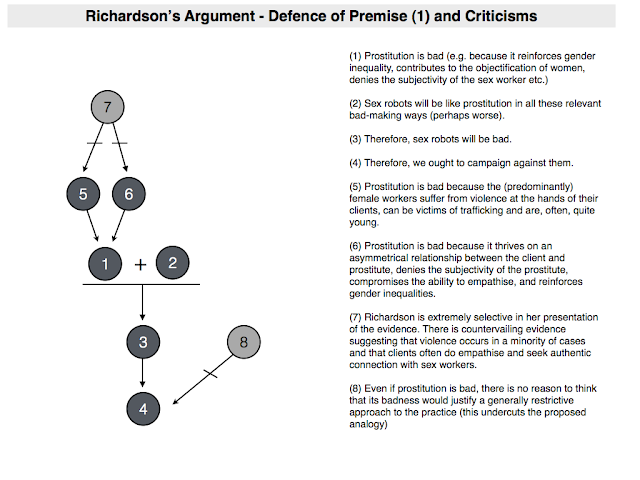

“Position Paper” on the Campaign’s website, which was written by Richardson. With the exception of its conclusion (which as I just noted is somewhat obscure) this paper does present a reasonably clear argument “against” sex robots. The argument is built around an analogy with human sex worker-client relationships (or, as Richardson prefers, ‘prostitute-john’ relationships). It is not set out explicitly anywhere in the text of the article. Here is my attempt to make its structure more explicit:

- (1) Prostitution is bad (e.g. because it reinforces gender inequality, contributes to the objectification of women, denies the subjectivity of the sex worker etc.)

- (2) Sex robots will be like prostitution in all these relevant bad-making ways (perhaps worse).

- (3) Therefore, sex robots will be bad.

- (4) Therefore, we ought to campaign against them.

This is an analogical argument, so it is not formally valid. I have tried to be reasonably generous in this reconstruction. My generosity comes in the vagueness of the premises and conclusions. The idea is that this vagueness allows the argument to work for either the strong or weak versions of the Campaign that I outlined above. So the first premise merely claims that there are several bad or negative features of prostitution; the second premise claims that these features will be shared by the development of sex robots; the first conclusion confirms the “badness” of sex robots; and the second conclusion is tacked on (minus a relevant supporting principle) in order to link the argument to the goals of the Campaign itself. It is left unclear what these goals actually are.

Vagueness of this sort is usually a vice, but in this context I’m hoping it will allow me to be somewhat flexible in my analysis. So in what follows I will evaluate each premise of the argument and see what kind of support they lend the conclusion(s). It will be impossible to divorce this analysis from the practical policy questions (i.e. should we campaign for regulation or criminalisation?). So I will try to evaluate the argument in relation to both strong and weak versions of the policy aims. To remove any sense of mystery from this analysis, I will state upfront that my conclusion will be that the argument is too weak to support a strong version of the campaign. It may suffice to support a weaker version, but this would have to be very modest in its aims, and even then it wouldn’t be particularly persuasive because it ignores reasons to favour the creation of sex robots and reasons to doubt the wisdom of interventionist policies.

3. Is Prostitution Bad?

Let’s start with premise (1) and the claim that prostitution is bad.

I have written several pieces about the ethics of sex work. Those pieces evaluate most of the leading objections to the legalisation/normalisation of sex work. Richardson’s article recapitulates many of these objections. It initially expresses some disapproval of the “sex work” discourse, viewing the use of terms like ‘sex work’ and ‘sex worker’ as part of an attempt to legitimate an oppressive form of labour. (I should qualify that because Richardson doesn’t write with the normative clarity of an ethicist; she is an anthropologist and the detached stance of the anthropologist is apparent at times in her paper, despite the fact that the paper and the Campaign clearly have normative aims.) She then starts to identify various bad-making properties of prostitution. These include things like the prevalence of violence and human trafficking in the industry, along with reference to statistics about the relative youth of its workers (75% are between 13 and 25, according to one source that she cites).

Her main objection to prostitution, however, focuses on the asymmetrical relationship between the prostitute and the client, the highly gendered nature of the employment (predominantly women and some men providing the service for men), and the denial of subjectivity (and corresponding objectification) the commercialisation entails. To support this view, Richardson quotes from a study of consumers of prostitution, who said things like:

‘Prostitution is like masturbating without having to use your hand’,

‘It’s like renting a girlfriend or wife. You get to choose like a catalogue’,

‘I feel sorry for these girls but this is what I want’

(Farley et al 2009)

Each of these views seems to reinforce the notion that the sex worker is being treated as little more than an object and that their subjectivity is being denied. The client and his needs are all that matters. What’s happening here, according to Richardson, is that the client is elevating his status and failing to empathise with the prostitute: substituting his fantasies for her real feelings. This is a big problem. The failure or inability to empathise is often associated with higher rates of crime and violence. She cites Baron-Cohen’s work on empathy and evil in support of this view.

To sum up, we seem to have two main criticisms of prostitution in Richardson’s article:

- (5) Prostitution is bad because the (predominantly) female workers suffer from violence at the hands of their clients, can be victims of trafficking and are, often, quite young.

- (6) Prostitution is bad because it thrives on an asymmetrical relationship between the client and prostitute, denies the subjectivity of the prostitute, compromises the ability of the client to empathise, and reinforces gender inequalities.

Are these criticisms any good? I have my doubts. Two points jump out at me. First, I think Richardson is being extremely selective and biased in her treatment of the evidence in relation to prostitutes and their clients. Second, even if she is right about these bad-making properties, there is no direct line from these properties to the appropriate policy response. In particular, there is no direct line from these properties to the criminalisation or restriction of prostitution. Let me briefly expand on these points.

On the first point, Richardson does cite evidence supporting the view that violence and trafficking are common in the sex work industry, and that clients deny the subjectivity of sex workers. But she ignores countervailing evidence. I don’t want to get too embroiled in weighing the empirical evidence. This is a complex debate, and there are certainly many negative features of the sex work industry. All I would say is that things are not as unremittingly awful as Richardson seems to suggest. Sanders, O’Neill and Pitcher, in their book Prostitution: Sex Work, Policy and Politics, offer a more nuanced summary of the empirical literature. For instance, in relation to violence within the industry, they note that while the incidence is “high” and probably under-reported, it tends to be more prevalent for street-based sex workers, and that violence is usually associated with a minority of clients:

While clients are the most commonly reported perpetrators of violence against female sex workers, Kinnell (2006a) suggests that a minority of clients commit violence against sex workers and that often men who attack or murder sex workers frequently have a past history of violence against sex workers and other women….It must be remembered that the majority of commercial transactions take place without violence or incidence.

(Sanders et al 2009, 44)

On the lack of empathy and the denial of subjectivity, they offer a similarly nuanced view. First, they note how a highly conservative view of sexuality is often embedded in critiques of sex work:

There is generally a taboo about the types of sex involved in a commercial contact. The idea of time-limited, unemotional sex between strangers is what is often conjured up when commercial sex is imagined… The ‘seedy’ idea of commercial sex preserves the notion that only emotional, intimate sex can be found in long-term conventional relationships, and that other forms of sex (casual, group, masturbatory, BDSM, etc.) are unsatisfying, abnormal and also immoral.

(Sanders et al 2009, 83)

They then go on to paint a complex picture of the attitude of clients toward sex workers:

[T]he argument is that general understandings of sex work and prostitution are based on false dichotomies that distinguish commercial sexual relationships as dissonant from non-commercial ones. Sanders (2008b) shows that there is mutual respect and understanding between regular clients and sex workers, dispelling the myth that all interactions between sex workers and clients are emotionless. There is ample counter-evidence (such as Bernstein 2001, 2007) that indicates that clients are ‘average’ men without any particular or peculiar characteristics and increasingly seeking ‘authenticity’, intimacy and mutuality rather that trying to fulfil any mythology of violent, non-consensual sex.

(Sanders et al 2009, 84).

I cite this not to paint a rosy and pollyannish view of sex work. Far from it. I merely cite it to highlight the need for greater nuance than Richardson seems willing to provide. It is simply not true that all forms of prostitution involve the troubling features she identifies. Furthermore, in relation to an issue like trafficking, while I would agree that certain forms of trafficking are unremittingly awful, there is still a need for nuance. Trafficking-related statistics sometimes conflate general illegal labour migration (i.e. workers moving for better opportunities) with the stereotypical view of trafficking as a modern form of slavery.

This brings me to the second criticism. Even if Richardson is right about the bad-making properties of prostitution, there is no reason to think that those properties are sufficient to warrant criminalisation or any other highly restrictive policy. For instance, denials of subjectivity and asymmetries of power are rife throughout the capitalistic workplace. Many of the consumer products we buy are made possible by, arguably, exploitative international trade networks. And many service workers in our economies have their subjectivity denied by their clients. I often fail to care about the feelings of the barista making my morning coffee. But in these cases we typically do not favour criminalisation or restriction. At most, we favour a change in regulation and behaviour. Likewise, many of the negative features of prostitution could be caused (or worsened) by its criminalisation. This is arguably true of violence and trafficking. It is because sex workers are criminalised that they fail to obtain the protections afforded to most workers and fail to report what happens to them. This is why many sex worker activists — who are in no way unrealistic about the negative features of the job — favour legalisation and regulation. So Richardson will need to do more than single out some negative features of prostitution to support her analogical argument. I have tried to summarise these lines of criticism in the diagram below.

In the end, however, it is not worth dwelling too much on the bad-making properties of prostitution. The analogy is important to Richardson’s argument, but it is not the badness of prostitution that matters. What matters is the claim that these properties will be shared by the development of sex robots. This is where premise (2) comes in.

4. Would the development of sex robots be bad in the same way?

Premise (2) claims that the development of sex robots will replicate and reinforce the bad-making properties of prostitution. There are two things we need to figure out in relation to this claim. The first is how it should be interpreted; the second is how it is supported.

In relation to the interpretive issue, we must ask: Is the claim that, just as the treatment and attitude toward prostitutes is bad, so too will be the treatment and attitude toward sex robots? Or is it that the development of sex robots will increase the demand for human prostitution and/or thereby encourage users of sex robots to treat more real humans (particularly women) as objects? Richardson’s paper supports the latter interpretation. At the outset, she states that her concern about sex robots is that they:

[legitimate] a dangerous mode of existence where humans can move about in relations with other humans but not recognise them as human subjects in their own right.

(Richardson 2015)

The key phrase here seems to be “in relations with other humans”, suggesting that the worry is about how we end up treating one another, not how we treat the robots themselves. This is supported in the conclusion where she states:

In this paper I have tried to show the explicit connections between prostitution and the development and imagination of human-sex robot relations. I propose that extending relations of prostitution into machines is neither ethical, nor is it safe. If anything the development of sex robots will further reinforce relations of power that do not recognise both parties as human subjects.

(Richardson 2015)

Again, the emphasis in this quote seems to be on how the development of sex robots will affect inter-human relationships. Let’s reflect this in a modified version of premise (2):

- (2*) Sex robots will add to and reinforce the bad-making properties of prostitution (i.e. they will encourage us to treat one another with a lack of empathy and exacerbate existing gender/power inequalities).

How exactly is this supported? As best I can tell, Richardson supports it by referring to the work of David Levy and then responding to a number of counter-arguments. In his book Love and Sex with Robots, David Levy drew explicit parallels between the development of sex robots and prostitution. The idea was that the relationship between a user and his/her sex robot would be akin to the relationship between a client and a prostitute. Levy was quite explicit about this and spent a good part of his book looking at the motivations of those who purchase sex and how those motivations might transfer onto sex robots. He was reasonably nuanced in his discussion of this literature, though you wouldn’t be able to tell this from Richardson’s article (for those who are interested, I’ve analysed Levy’s work previously). In any event, the inference Richardson draws from this is that the development of sex robots is proceeding along the lines that Levy imagines and hence we should be concerned about its potential to reinforce the bad-making properties of prostitution.

- (9) Levy models the development of sex robots on the relationship between clients and prostitutes, therefore it is likely that the development of such robots will add to and reinforce the bad-making properties of prostitution.

I have to say I find this to be a weak argument, but I’ll get back to that later because Richardson isn’t quite finished with the defence of her view. She recognises that there are at least two major criticisms of her claim. The first holds that if robots are not persons (and for now we will assume that they are not) then there is nothing wrong with treating them as objects/things which we can use for our own pleasure. In other words, the technology is a morally neutral domain in which we can act out our fantasies. The second criticism points to the potentially cathartic effect of these technologies. If people act out negative or violent sexual fantasies on a robot, they might be less inclined to do so to a real human being. Sex robots may consequently help to prevent the bad things that Richardson worries about.

- (10) Sex robots are not persons; they are things: it is appropriate for us to treat them as things (i.e. the technology is a morally neutral domain for acting out our sexual fantasies)

- (11) Use of sex robots could be cathartic, e.g. using the technology to act out negative or violent sexual fantasies might stop people from doing the same thing to a real human being.

Richardson has responses to both of these criticisms. In the first instance, she believes that technology is not a value-neutral domain. Our culture and our norms are reflected in our technology. So we should be worried about how cultural meaning gets incorporated into our technology. Furthermore, she has serious doubts about the catharsis argument. She points to the historical relationship between pornography and prostitution. Pornography has now become widely available, but this has not led to a corresponding decline in prostitution nor, in the case of child pornography, abuse of real children. On the contrary, prostitution actually appears to have increased alongside pornography. The same appears to be true of the relationship between sex toys/dolls and prostitution:

The arguments that sex robots will provide artificial sexual substitutes and reduce the purchase of sex by buyers is not borne out by evidence. There are numerous sexual artificial substitutes already available, RealDolls, vibrators, blow-up dolls etc., If an artificial substitute reduced the need to buy sex, there would be a reduction in prostitution but no such correlation is found.

(Richardson 2015)

In other words:

- (12) Technology is not a morally neutral domain: societal values and ethics are inflected in our technologies.

- (13) There is no evidence to suggest that the cathartic argument is correct: prostitution has not decreased in response to the increased availability of pornography and/or sex toys.

Is this a robust defence of premise (2)? Does it support the overall argument Richardson wishes to make? Once again, I have my doubts. Some of the evidence she adduces is weak, and even if it is correct, it in no way supports a strongly restrictive approach to the development of sex robots. At best, it supports a regulative approach. Furthermore, in adopting that more regulative approach we need to be sensitive to both the merits and demerits of this technology and the costs of any proposed regulative strategy. This is something that Richardson neglects because she focuses almost entirely on the negative. In this vein, let me offer five responses to her argument, some of which target her support of premise (2*), others of which target the relationship between any putative bad-making properties of sex robots and the need for a ‘campaign’ against them.

First, I think Richardson’s primary support for premise (2), viz. that it is reflected in the model of sex robot development used by David Levy, is weak. True, Levy is a pioneer in this field and may have a degree of influence (I cannot say for sure). But that doesn’t mean that all sex robot developers have to adopt his model. If we are worried about the relationship between the sex robot user and the robot, we can try to introduce standards and regulations that reflect a more positive set of sexual norms. For instance, the makers of Roxxxy (billed as the world’s first sex robot) claim to include a personality setting called ‘Frigid Farah’ with their robot. Frigid Farah will demonstrate some reluctance to the user’s sexual advances. You could argue that this reflects a troubling view of sexual consent: that resistance is not taken seriously (i.e. that ‘no’ doesn’t really mean ‘no’). But you could try to regulate against this and insist that every sex robot be required to give positive, affirmative signals of consent. This might reflect and reinforce a more desirable attitude toward sexual consent. And this is just an illustration of the broader point: that sex robots need not reflect negative social attitudes toward sex. We could demand and enforce a more positive set of attitudes. Maybe this is all Richardson really wants her campaign to achieve, i.e. to change the models adopted in the development of sex robots. But in that case, she is not really campaigning against them, she is campaigning for a better version of them.

Second, I think it is difficult to make good claims about the likely link between the use of a future technology like sex robots and actions toward real human beings. In this light, I find her point about the correlation between pornography and an increase in prostitution relatively unpersuasive. Unlike her, I don’t believe sex work is unremittingly bad and so I am not immediately worried about this correlation. What I would find more persuasive is evidence of some correlation (and ultimately some causal link) between the increase in pornography/prostitution and the mistreatment of sex workers. I don’t know what the evidence is on that, but I think there is some reason to doubt it. Again, Sanders et al discuss ways in which the mainstreaming and legalisation of prostitution is sometimes associated with a decrease in mistreatment, particularly violence. This might give some reason for optimism.

A better case study for Richardson’s argument would probably be the debate about the link between pornography (adult hardcore or child) and real-world sexual violence/assault (toward adults or children). If it can be shown that exposure to pornography increases real-world sexual assault, then maybe we do have reason to worry about sex robots. But what does that evidence currently say?

I reviewed the empirical literature in my article on robotic rape and robotic child sexual abuse. I concluded that the evidence at the moment is relatively ambiguous. Some studies show an increase; some show a decrease; and some are neutral. I speculated that we may be landed in a similarly ambiguous position when it comes to evidence concerning a link between sex robot usage and real-world sexual assault. That said, I also speculated that sex robots may be quite different to pornography: there may be a more robust real-world effect from using a sex robot. It is simply too early and too difficult to tell. Either way, I don’t see anything in this to support Richardson’s moral panic.

Third, if the evidence in relation to sex robot usage does end up being ambiguous, then I suspect the best way to argue against the development of sex robots is to focus on the symbolic meaning that attaches to their use. Richardson doesn’t seem to make this argument (though there are hints). I explored it in my paper on robotic rape and robotic child sexual abuse, and others have explored it in relation to video games and fiction. The idea would be that a person who derives pleasure from having sex with a robot displays a disturbing moral insensitivity to the symbolic meaning of their act, and this may reflect negatively on their moral character. I suggested that this might be true for people who derive sexual pleasure from robots that are shaped like children or that cater to rape fantasies. The problem here is not to do with the possible downstream, real-world consequences of this insensitivity. The problem has to do with the act itself. In other words, the argument is about the intrinsic properties of the act, not its extrinsic, consequential properties. This is a better argument because it doesn’t force one to speculate about the likely effects of a technology on future behaviour. But this argument is quite limited. I think it would, at best, apply to a limited subset of sex robot usages, and probably would not warrant a ban or, indeed, a campaign against any and all sex robots.

Fourth, when thinking about the appropriate policy toward sex robots, it is important that we weigh the good against the bad. Richardson seems to ignore this point. Apart from her references to the catharsis argument, she nowhere mentions the possible good that could be done by sex robots. My colleague Neil McArthur has looked into some of these possibilities. There are several arguments that could be made. There is the simple hedonistic argument: sex robots provide people with a way of achieving pleasurable states of consciousness. There is the distributive argument: for whatever reason, there are people in the world today who lack access to certain types of sexual experience; sex robots could make those experiences (or, at least, close approximations of them) available to such people. This type of argument has been made in relation to the value of sex workers for persons with disabilities. Indeed, there are charities set up that try to link persons with disabilities to sex workers for this very reason. There is also the argument that sex robots could ameliorate imbalances in sex drive between the partners in existing relationships, or could add some diversity to the sex lives of such couples without involving third parties (and the potential interpersonal strife to which they could give rise). It could also be the case that sex robots allow particular forms of sexual self-expression to flourish, and so, in the interests of basic sexual freedom, we should permit them. Finally, unlike Richardson, we shouldn’t completely discount the possibility of sex robots reducing other forms of sexual harm. This is by no means an exhaustive list of positive attributes. It simply highlights the fact that there is some potential good to the technology, and this must be weighed against any putative negative features when determining the appropriate policy.

Fifth, and finally, when thinking about the appropriate policy you also need to think about the potential costs of that policy. We might agree that there are bad-making properties to sex robots, but it could be that any proposed regulatory intervention would do more harm than good. I can see plausible ways in which this could be true for regulatory interventions into sex robots. Regulation of pornography, for instance, has historically involved greater restrictions on pornography from sexual minorities (e.g. gay and lesbian porn). Regulatory intervention into sex robots may end up doing the same. I think it is particularly important to bear this in mind in light of Sanders et al’s comments about stereotypical views of unemotional commercialised sex feeding into prohibitive policies. It may also be the case that policing the development and use of sex robots requires significant resources and significant intrusions into our private lives. I’m not sure that we should want to bear those costs. Less intrusive regulatory policies (e.g. ones that just encourage manufacturers to avoid problematic stereotypes or norms in the construction of sex robots) might be more tolerable. Again, maybe that’s all Richardson wants. But she needs to make that clear and to avoid simply emphasising the negative.

5. Conclusion

This post has been long. To sum up, I find the Campaign Against Sex Robots puzzling and problematic. I do so for three main reasons:

A. I think the current fanfare associated with the Campaign stems from its own equivocation regarding its core policy aims. Some of the statements by its members, as well as the name of the campaign itself, suggest a generalised campaign against all forms of sex robots. This is interesting from a media perspective, but difficult to defend. Some other statements suggest a desire for more ethical awareness in the creation of sex robots. This seems unobjectionable, but a lot less interesting and in need of far more nuance. It would also necessitate some re-branding of the Campaign (e.g. to ‘The Campaign for Ethical Sex Robots’).

B. The first premise of the argument in favour of the campaign focuses on the bad-making properties of prostitution. But this premise is flawed because it fails to factor in countervailing evidence about the experiences of sex workers and the attitudes of their clients, and because, even if it were true, it would not support a generalised campaign against sex work. Indeed, sex worker activists often argue the reverse: that the bad-making properties of prostitution are partly a result of its criminalisation and restriction, and not intrinsic to the practice itself.

C. The second premise of the argument focuses on how the bad-making properties of prostitution might carry over to the development of sex robots. But this premise is flawed for several reasons: (i) it is supported by reference to the work of one sex robot theorist and there is no reason why his view must dominate the development process; (ii) it relies on dubious claims about the likely causal link between the use of sex robots and the treatment of human beings; (iii) it fails to make the strongest argument in support of a restrictive attitude toward sex robots (the symbolic meaning argument), but even if it did, that argument would be limited and would not lend support to a general campaign; (iv) it fails to consider the possible good-making properties of sex robots; and (v) it fails to consider the possible costs of regulatory intervention.

None of this is to suggest that we shouldn’t think carefully about the ethics of sex robots. We should. But the Campaign Against Sex Robots does not seem to be contributing much to the current discussion.