
Inequality is now a major topic of concern. Only those with their heads firmly buried in the sand would have failed to notice the rising chorus of alarm about wealth inequality over the past couple of years. From the economic tomes of Thomas Piketty and Tony Atkinson, to the battle-cries of the 99%, and on to the political successes of Jeremy Corbyn in the UK and Bernie Sanders in the US, the notion that inequality is a serious social and political problem seems to have captured the popular imagination.

In the midst of all this, a standard narrative has emerged. We were all fooled by the triumphs of capitalism in the 20th century. The middle part of the 20th century — from roughly the end of WWII to 1980 — saw significant economic growth and noticeable reductions in inequality. We thought this could last forever: that growth and equality could go hand in hand. But this was an aberration. Since 1980 the trend has reversed. We are now returning to levels of inequality not seen since the late 19th century. The 1% of the 1% is gaining an increasing share of the wealth.

What role does technology have to play in this standard narrative? No doubt, there are lots of potential explanations of the recent trend, but many economists agree that technology has played a crucial role. This is true even of economists who are sceptical of the more alarmist claims about robots and unemployment. David Autor is one such economist. As I noted in my previous entry, Autor is sceptical of authors like Brynjolfsson and McAfee who predict an increase in automation-induced structural unemployment. But he is not sceptical about the dramatic effects of automation on employment patterns and income distribution.

In fact, Autor argues that automating technologies have led to a polarisation effect — actually, two polarisation effects. These can be characterised in the following manner:

Occupational Polarisation Effect: Growth in automating technologies has facilitated the polarisation of the labour market, such that people are increasingly being split between two main categories of work: (i) manual and (ii) abstract.

Wage Polarisation Effect: For a variety of reasons, and contrary to some theoretical predictions, this occupational polarisation effect has also led to an increase in wage inequality.

I want to look at Autor’s arguments for both effects in the remainder of this post.

1. Is there an occupational polarisation effect?

The evidence for an occupational polarisation effect is reasonably compelling. To appreciate it, and to understand why it has happened, we need to consider the different types of work that people engage in, and the major technological changes over the past 30 years.

Work is a complex and multifaceted phenomenon. Any attempt to reduce it to a few simple categories will do violence to the complexity of the real world. But we have to engage in some simplifying categorisations to make sense of things. To that end, Autor thinks we can distinguish between three main categories of work in modern industrial societies:

Routine Work: This consists in tasks that can be codified and reduced to a series of step-by-step rules or procedures. Such tasks are ‘characteristic of many middle-skilled cognitive and manual activities: for example, the mathematical calculations involved in simple bookkeeping; the retrieving, sorting and storing of structured information typical of clerical work; and the precise executing of a repetitive physical operation in an unchanging environment as in repetitive production tasks’ (Autor 2015, 11).

Abstract Work: This consists in tasks that ‘require problem-solving capabilities, intuition, creativity and persuasion’. Such tasks are characteristic of ‘professional, technical, and managerial occupations’ which ‘employ workers with high levels of education and analytical capability’ placing ‘a premium on inductive reasoning, communications ability, and expert mastery’ (Autor 2015, 12).

Manual Work: This consists in tasks ‘requiring situational adaptability, visual and language recognition, and in-person interactions’. Such tasks are characteristic of ‘food preparation and serving jobs, cleaning and janitorial work, grounds cleaning and maintenance, in-person health assistance by home health aides, and numerous jobs in security and protective services.’ These jobs employ people ‘who are physically adept, and, in some cases, able to communicate fluently in spoken language’ but would generally be classified as ‘low-skilled’ (Autor 2015, 12).

This threefold division makes sense. I certainly find it instructive to classify myself along these lines. I may be wrong, but I think it would be fair to classify myself (an academic) as an abstract worker, insofar as the primary tasks within my job (research and teaching) require problem-solving ability, creativity and persuasion, though there are certainly aspects of my job that involve routine and manual tasks too. But this simply helps to underscore one of Autor’s other points: most work processes are made up of multiple, often complementary, inputs, even when one particular class of inputs tends to dominate.

This threefold division helps to shine light on the polarising effect of technology over the past thirty years. The major growth area in technology over that period of time has been in computerisation and information technology. Indeed, the growth in that sector has been truly astounding (exponential in certain respects). We would expect such astronomical growth to have some effect on employment patterns, but that effect would depend on the answer to a critical question: what is it that computers are good at?

The answer, of course, is that computers are good at performing routine tasks. Computerised systems run on algorithms, which are encoded step-by-step instructions for taking an input and producing an output. Growth in the sophistication of such systems, and reductions in their cost, create huge incentives for businesses to use computerised systems to replace routine workers. Since those workers (e.g. production workers, clerical and admin staff) traditionally represented the middle-skill level of the labour market, the net result has been a polarisation effect. People are forced into either manual (low-skill) or abstract (high-skill) work. Now, the big question is whether automation will eventually displace workers in those categories too, but to date manual and abstract work have remained difficult to automate, hence the polarisation.

As I said at the outset, the evidence for this occupational polarisation effect is reasonably compelling. The diagram below, taken directly from Autor’s article, illustrates the effect in the US labour market from the late 1970s up to 2012. It depicts the percentage change in employment across ten different categories of work. The three categories on the left represent manual work, the three in the middle represent routine work, and the four on the right represent abstract work. As you can see, growth in routine work has either been minimal (bearing in mind the population increase) or negative, whereas growth in abstract and manual work has been much higher (though there have been some recent reversals, probably due to the Great Recession and possibly, though this is less certain, to further advances in automating technologies).

(Source: Autor 2015, 13)

Similar evidence is available for a polarisation effect in EU countries, but I’ll leave you to read Autor’s article for that.

2. Has this led to increased wage inequality?

Increasing polarisation in the types of work that we do need not lead to an increase in wage inequality. Indeed, certain theoretical assumptions might lead us to predict otherwise. As discussed in a previous post, increased levels of automation can sometimes give rise to a complementarity effect. This happens when the gains from automation in one type of work process also translate into gains for workers engaged in complementary types of work. So, for instance, automation of manufacturing processes might increase demand for skilled maintenance workers, which should, in principle, increase the price they can obtain for their labour. This means that even if the labour force has bifurcated into two main categories of work (one traditionally classed as low-skill, the other as high-skill), it does not follow that we would necessarily see an increase in income inequality. On the contrary, both categories of workers might be expected to see an increase in income.

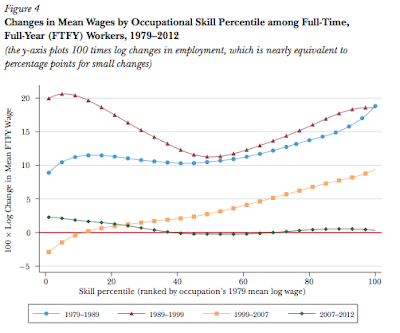

But this theoretical argument depends on a crucial ‘all else being equal’ clause. In this respect it is in good company: many economic arguments depend on such clauses. The reality is that all else is not equal. Abstract and manual workers have not seen complementary gains in income. On the contrary, the evidence we have seems to suggest that abstract workers have seen consistent increases in income, while manual workers have not. The evidence here is, however, more nuanced than in the case of occupational polarisation. Consider the diagram below.

(Source: Autor 2015, 18)

This diagram requires a lot of interpretation. It is fully explained in Autor’s article; I can only offer a quick summary. Roughly, it depicts the changes in mean wages among US workers between 1979 and 2012, relative to their occupational skill level. The four curves represent different periods of time: 1979–1989, 1989–1999, etc. The horizontal axis represents the skill level. The vertical axis represents the changes in mean wages. And the baseline (0) is set by reference to mean wages in 1979. What the diagram tells us is that mean wages have, in effect, consistently increased for high-skill workers (i.e. those in abstract jobs). We know this because the right-hand portion of each curve trends upwards (excepting the period covering the Great Recession). It also tells us that low-skill workers (the left-hand portions of the curves) saw increases in the 1980s and 1990s, followed by low-to-negative changes in the 2000s. This is despite the fact that the number of workers in those categories increased quite dramatically in the 2000s (the earlier diagram illustrates this).

As I said, the evidence here is more nuanced, but it does point to a wage polarisation effect. It is worth understanding why this has happened. Autor suggests that three factors have contributed to it:

Complementarity effects of information technology benefit abstract workers more than manual workers: As defined above, abstract work is analytical, problem-solving, creative and persuasive. Most abstract workers rely heavily on ‘large bodies of constantly evolving expertise: for example, medical knowledge, legal precedents, sales data, financial analysis’ and so on (Autor 2015, 15). Computerisation greatly facilitates our ability to access such bodies of knowledge. Consequently, the dramatic advances in computerisation have strongly complemented the tasks being performed by abstract workers (though I would note it has also forced abstract workers to perform more and more of their own routine administrative tasks).

Demand for the outputs of abstract workers seems to be relatively elastic: Elasticity is a measure of how responsive some economic variable (e.g. demand or supply) is to changes in another variable (e.g. price). By making abstract workers more productive, computerisation lowers the effective cost of their outputs. If demand for those outputs were inelastic, then we would not expect advances in computerisation to fuel significant increases in the numbers of abstract workers. But in fact we see the opposite: demand for such workers has gone up. Autor suggests that healthcare workers are the best example of this: demand for healthcare workers has increased despite significant advances in healthcare-related technologies.

There are greater barriers to entry into the labour market for abstract work: This is an obvious one, but worth stressing. Most abstract work requires high levels of education, training and credentialing (for both good and bad reasons). It is not all that easy for displaced workers to transition into those types of work. Conversely, manual work tends not to require high levels of education and training. It is relatively easy for displaced workers to transition to these types of work. The result is an over-supply of manual labour, which depresses wages.
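A short worked illustration may help with the elasticity point in the second factor. It uses the standard textbook definition of the price elasticity of demand; the notation and the numbers (a 10% fall in price and a 20% rise in quantity demanded) are my own hypothetical choices, not figures from Autor.

```latex
% Price elasticity of demand: Q = quantity demanded, P = price (hypothetical illustration)
\begin{aligned}
\varepsilon_d &= \frac{\Delta Q / Q}{\Delta P / P}
  \qquad \text{(demand is elastic when } |\varepsilon_d| > 1\text{)} \\
\varepsilon_d &= \frac{+20\%}{-10\%} = -2 \\
P'Q' &= (0.9\,P)(1.2\,Q) = 1.08\,PQ
\end{aligned}
```

On these assumed numbers, a fall in the effective price of the output is more than offset by the rise in quantity demanded, so total spending on it rises by 8%, which leaves room for more abstract workers to be employed. Had demand been inelastic (say a 5% rise in quantity for the same 10% price fall), total spending would instead have shrunk to 0.9 × 1.05 = 0.945 of its former level.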

The bottom line is this: abstract workers have tended to benefit, in the form of higher wages, from the displacement of routine work; manual workers have not. The net result is a wage polarisation effect.

3. Conclusion

I don’t have too much to say about this except to stress its importance. There has been a lot of hype and media interest in the ‘rise of the robots’. This hype and interest have often been conveyed through alarmist headlines like ‘the robots are coming for our jobs’ and so on. While this is interesting, and worthy of scrutiny, it is not the only interesting or important thing. Even if technology does not lead to a long-term reduction in the number of jobs, it may nevertheless have a significant impact on employment patterns and income distribution. The evidence presented by Autor bears this out.

One final point before I wrap up. It is worth bearing in mind that the polarisation effects described in this post are only concerned with types of work and wage inequalities affected by technology. Wage and wealth inequality are much broader phenomena and have been exacerbated by other factors. I would recommend reading Piketty or Atkinson for more information about these broader phenomena.